Slurm config on Mistral has been updated to fix an issue related to memory use.

Issue

Prior to the update, some Slurm jobs kept consuming the available memory (and even swap) of the allocated node and exceeded the memory allocated to them (set in sbatch or srun). When this happened, it also affected other jobs and users on that node.

Now?

Slurm jobs, including JupyterHub sessions, that exceed their allocated memory are now killed by Slurm. A killed JupyterHub session has to be restarted.
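To see how close a notebook is to its limit before Slurm kills it, you can compare the kernel's peak memory with the allocation Slurm exported to the job. The sketch below is a minimal, unofficial helper, assuming the session's environment contains the standard Slurm variables `SLURM_MEM_PER_NODE` (set from `--mem`) or `SLURM_MEM_PER_CPU` together with `SLURM_CPUS_ON_NODE`, with values given as plain integers in MB. The function names are hypothetical. It only measures the kernel process itself (via Python's `resource` module), so child processes or other kernels running in the same job are not counted.

```python
import os
import resource
from typing import Mapping, Optional


def allocated_mem_mb(env: Mapping[str, str] = os.environ) -> Optional[int]:
    """Memory (MB) Slurm allocated to this job on this node, or None if not found.

    Assumption: values are plain integers in MB, as exported by sbatch/srun.
    """
    # --mem is exported as SLURM_MEM_PER_NODE
    if "SLURM_MEM_PER_NODE" in env:
        return int(env["SLURM_MEM_PER_NODE"])
    # --mem-per-cpu is exported as SLURM_MEM_PER_CPU; scale by the CPUs on this node
    if "SLURM_MEM_PER_CPU" in env and "SLURM_CPUS_ON_NODE" in env:
        return int(env["SLURM_MEM_PER_CPU"]) * int(env["SLURM_CPUS_ON_NODE"])
    return None


def peak_rss_mb() -> float:
    """Peak resident memory of this Python process in MB (Linux reports ru_maxrss in KiB)."""
    return resource.getrusage(resource.RUSAGE_SELF).ru_maxrss / 1024


def memory_report(env: Mapping[str, str] = os.environ) -> str:
    """One-line summary of this process's peak usage versus the Slurm allocation."""
    peak = peak_rss_mb()
    alloc = allocated_mem_mb(env)
    # A missing or zero value (e.g. --mem=0) gives no usable limit to compare against
    if alloc is None or alloc <= 0:
        return f"peak RSS {peak:.0f} MB (no usable Slurm memory allocation found in the environment)"
    return f"peak RSS {peak:.0f} MB of {alloc} MB allocated ({100 * peak / alloc:.0f}%)"


print(memory_report())
```

Running `print(memory_report())` in a notebook cell from time to time gives a rough early warning; if the percentage approaches 100, save your work and restart with more memory.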

How can I recognize that my session has stopped?

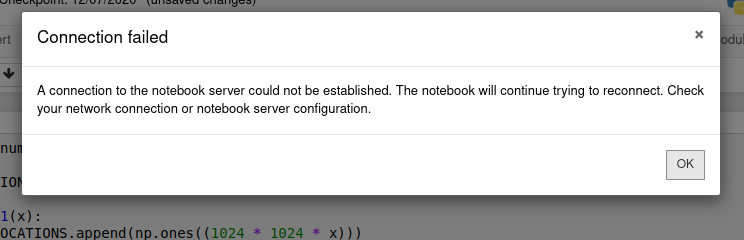

you will probably see a error message like this:

How to restart?

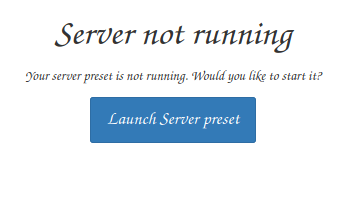

click again on Home

and then on Launch Server XXXX

Solution(s)

If the issue is related to memory, the obvious solution is to restart the JupyterHub session with more memory, either by selecting a different profile if you are using a preset, or by setting the memory yourself with --cpus-per-task/--mem.
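If you are not sure how much memory to request, one rough approach is to take the peak you observed in a previous session and add a safety margin. The sketch below turns a peak value in MB into a `--mem` flag and, for setups where memory is granted per CPU, into a `--cpus-per-task` count. The function names, the 1.5 headroom factor and the per-CPU memory value in the example call are illustrative assumptions, not Mistral defaults; check the actual limits of your partition before relying on them.

```python
import math


def suggest_mem_flag(peak_mb: float, headroom: float = 1.5) -> str:
    """Suggest a --mem value: observed peak times a safety margin, rounded up to whole GB."""
    if peak_mb <= 0 or headroom < 1:
        raise ValueError("peak_mb must be positive and headroom at least 1")
    gb = math.ceil(peak_mb * headroom / 1024)
    return f"--mem={gb}G"


def suggest_cpus_per_task(peak_mb: float, mem_per_cpu_mb: float, headroom: float = 1.5) -> int:
    """Suggest --cpus-per-task when memory is allocated per CPU.

    mem_per_cpu_mb is a hypothetical parameter: use the per-CPU memory of your partition.
    """
    if peak_mb <= 0 or mem_per_cpu_mb <= 0 or headroom < 1:
        raise ValueError("arguments must be positive and headroom at least 1")
    return math.ceil(peak_mb * headroom / mem_per_cpu_mb)


print(suggest_mem_flag(3000))             # prints --mem=5G (3000 MB * 1.5 = 4500 MB, rounded up)
print(suggest_cpus_per_task(3000, 1750))  # prints 3 (4500 MB / 1750 MB per CPU, rounded up)
```

Rounding up in both functions is deliberate: requesting slightly too much is cheaper than having the session killed again.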

HPC Blog